To be fair, I knew a lot of people who struggled with word problems in math class.

I stand with ChatGPT on this. Whoever created these double letters is the idiot here.

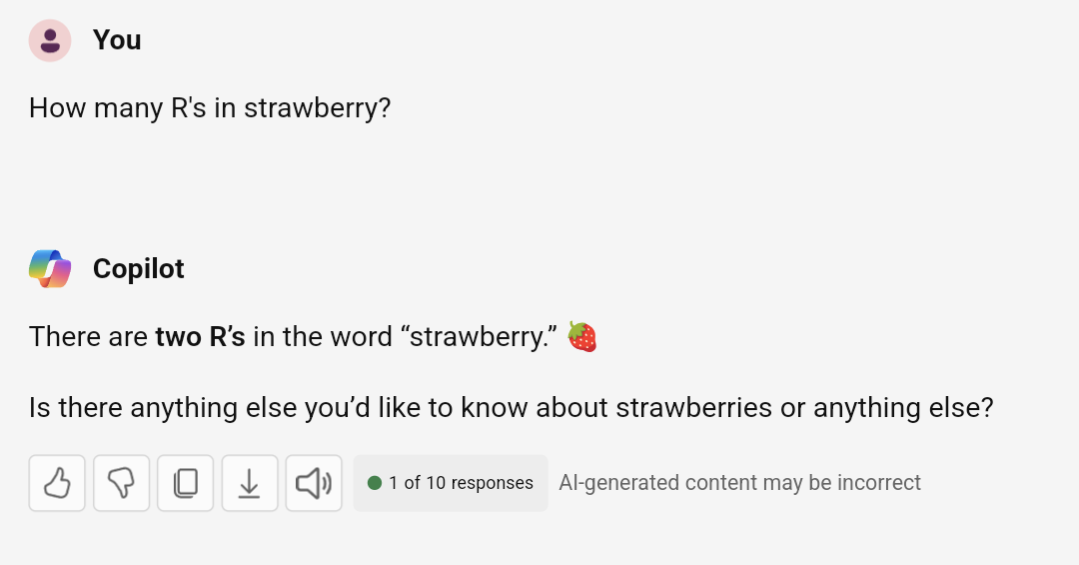

I tried it with my abliterated local model, thinking its alteration might help, and it gave the same answer. I asked if it was sure, and it then corrected itself (maybe by reexamining the word in a different way?). I then asked how many Rs are in "strawberries", thinking it would either treat it as a new word and give the same incorrect answer, or, since the earlier exchange was still in context, say it also has 3 Rs. Nope. It said 4 Rs! I then said "really?", and it corrected itself once again.
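
For what it's worth, here is the boring deterministic check, as a minimal sketch in Python. The helper name `count_letter` is just something made up for illustration; it leans on the built-in `str.count`, and it says nothing about how the model arrives at its answers.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


for word in ("strawberry", "strawberries"):
    # Prints each word followed by its number of Rs.
    print(word, count_letter(word, "r"))
# strawberry 3
# strawberries 3
```

So both words have 3 Rs, which means the "4 Rs" answer was wrong and the second correction was the right one.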

LLMs are very useful as long as you know how to maximize their power and you don't assume whatever they spit out is absolutely right. I've had great luck using mine to help with programming (basically as a Google, but formatting things far better than if I looked stuff up myself), but I've found some of the simplest errors in the middle of a lot of helpful things. It's at an assistant level, and you need to remember that an assistant helps you; they don't do the work for you.

Is there anything else or anything else you would like to discuss? Perhaps anything else?

Anything else?

Tbf, this is the kind of answer a person might give if you asked them the question randomly.
