
This post was submitted to the Technology community on 03 Oct 2024.


I believe accessibility is what makes LLMs helpful, at least when they're given a task that's easy enough to verify. Being able to ask something that resembles a human for what you need, instead of reading through what could be a textbook's worth of documentation to figure out what's available and how to make it fit your needs, is fairly powerful.

If it were actually capable of reasoning, I'd compare it to asking a linguist about the origin of a word versus looking it up in a dictionary. I don't think anyone would dispute that the dictionary is more likely to be fully accurate, but I'd personally still rather ask the person who seems to know, and then go back and double-check if I have reason to doubt them.

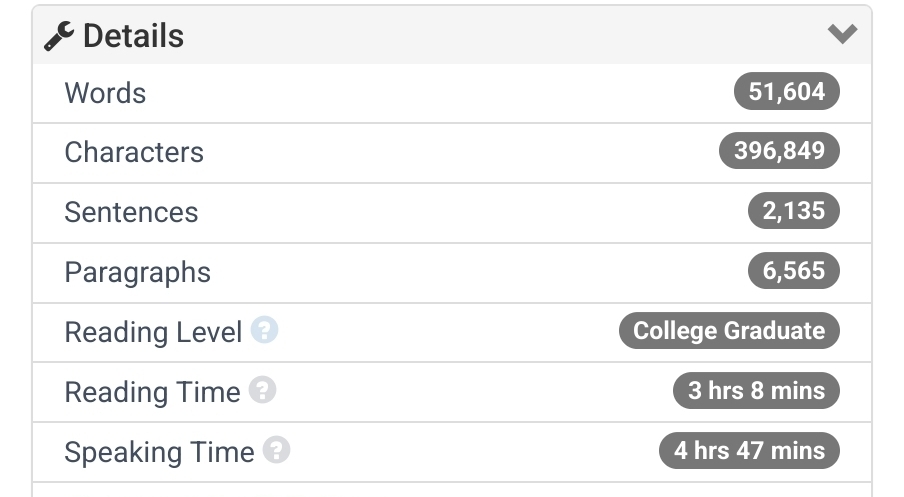

Here's the manpage for bash's statistics from wordcounter.net:

Perhaps LLMs can be used to pick up some working vocabulary in a subject you aren't familiar with. I'd say anything beyond that is a gamble, since there's no guarantee the model hasn't hallucinated. Remember that to spot incorrect info, you already need to be well acquainted with the subject, which is the polar opposite of just starting to learn the basics.

I do try to keep the "unknown unknowns" problem in mind when I use it, and I've been using it far less as I've grasped how OOP actually works and built up both the vocabulary and my own preferences. I try to ask it only for high-level pointers that I can then use to search the wider (hopefully more human) internet the traditional way. I fully appreciate that it's nothing more than an incredibly fancy auto-completion engine: the basic task of auto-complete just happens to look intelligent as it gets better and more complex, but it still lacks any real logical reasoning.