It would seem that I have far too much time on my hands. After the post about a Star Trek "test", I started wondering if there could be any data to back it up and... well here we go:

Those Old Scientists

| Name |

Total Lines |

Percentage of Lines |

| KIRK |

8257 |

32.89 |

| SPOCK |

3985 |

15.87 |

| MCCOY |

2334 |

9.3 |

| SCOTT |

912 |

3.63 |

| SULU |

634 |

2.53 |

| UHURA |

575 |

2.29 |

| CHEKOV |

417 |

1.66 |

The Next Generation

| Name |

Total Lines |

Percentage of Lines |

| PICARD |

11175 |

20.16 |

| RIKER |

6453 |

11.64 |

| DATA |

5599 |

10.1 |

| LAFORGE |

3843 |

6.93 |

| WORF |

3402 |

6.14 |

| TROI |

2992 |

5.4 |

| CRUSHER |

2833 |

5.11 |

| WESLEY |

1285 |

2.32 |

Deep Space Nine

| Name |

Total Lines |

Percentage of Lines |

| SISKO |

8073 |

13.0 |

| KIRA |

5112 |

8.23 |

| BASHIR |

4836 |

7.79 |

| O'BRIEN |

4540 |

7.31 |

| ODO |

4509 |

7.26 |

| QUARK |

4331 |

6.98 |

| DAX |

3559 |

5.73 |

| WORF |

1976 |

3.18 |

| JAKE |

1434 |

2.31 |

| GARAK |

1420 |

2.29 |

| NOG |

1247 |

2.01 |

| ROM |

1172 |

1.89 |

| DUKAT |

1091 |

1.76 |

| EZRI |

953 |

1.53 |

Voyager

| Name |

Total Lines |

Percentage of Lines |

| JANEWAY |

10238 |

17.7 |

| CHAKOTAY |

5066 |

8.76 |

| EMH |

4823 |

8.34 |

| PARIS |

4416 |

7.63 |

| TUVOK |

3993 |

6.9 |

| KIM |

3801 |

6.57 |

| TORRES |

3733 |

6.45 |

| SEVEN |

3527 |

6.1 |

| NEELIX |

2887 |

4.99 |

| KES |

1189 |

2.06 |

Enterprise

| Name |

Total Lines |

Percentage of Lines |

| ARCHER |

6959 |

24.52 |

| T'POL |

3715 |

13.09 |

| TUCKER |

3610 |

12.72 |

| REED |

2083 |

7.34 |

| PHLOX |

1621 |

5.71 |

| HOSHI |

1313 |

4.63 |

| TRAVIS |

1087 |

3.83 |

| SHRAN |

358 |

1.26 |

Discovery

Important Note: As the source material is incomplete for Discovery, the following table only includes line counts from seasons 1 and 4 along with a single episode of season 2.

| Name |

Total Lines |

Percentage of Lines |

| BURNHAM |

2162 |

22.92 |

| SARU |

773 |

8.2 |

| BOOK |

586 |

6.21 |

| STAMETS |

513 |

5.44 |

| TILLY |

488 |

5.17 |

| LORCA |

471 |

4.99 |

| TARKA |

313 |

3.32 |

| TYLER |

300 |

3.18 |

| GEORGIOU |

279 |

2.96 |

| CULBER |

267 |

2.83 |

| RILLAK |

205 |

2.17 |

| DETMER |

186 |

1.97 |

| OWOSEKUN |

169 |

1.79 |

| ADIRA |

154 |

1.63 |

| COMPUTER |

152 |

1.61 |

| ZORA |

151 |

1.6 |

| VANCE |

101 |

1.07 |

| CORNWELL |

101 |

1.07 |

| SAREK |

100 |

1.06 |

| T'RINA |

96 |

1.02 |

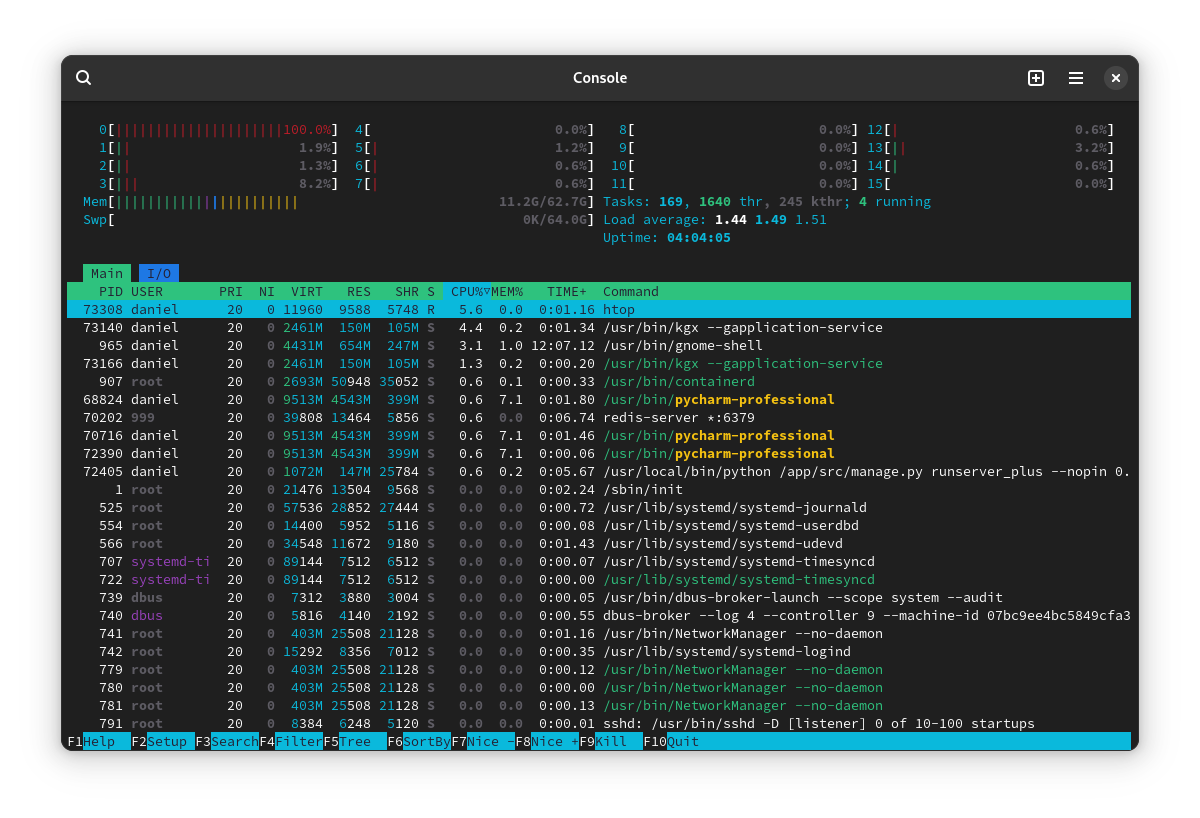

If anyone is interested, here's the (rather hurried, don't judge me) Python used:

#!/usr/bin/env python

#

# This script assumes that you've already downloaded all the episode lines from

# the fantastic chakoteya.net:

#

# wget --accept=html,htm --relative --wait=2 --include-directories=/STDisco17/ http://www.chakoteya.net/STDisco17/episodes.html -m

# wget --accept=html,htm --relative --wait=2 --include-directories=/Enterprise/ http://www.chakoteya.net/Enterprise/episodes.htm -m

# wget --accept=html,htm --relative --wait=2 --include-directories=/Voyager/ http://www.chakoteya.net/Voyager/episode_listing.htm -m

# wget --accept=html,htm --relative --wait=2 --include-directories=/DS9/ http://www.chakoteya.net/DS9/episodes.htm -m

# wget --accept=html,htm --relative --wait=2 --include-directories=/NextGen/ http://www.chakoteya.net/NextGen/episodes.htm -m

# wget --accept=html,htm --relative --wait=2 --include-directories=/StarTrek/ http://www.chakoteya.net/StarTrek/episodes.htm -m

#

# Then you'll probably have to convert the following files to UTF-8 as they

# differ from the rest:

#

# * Voyager/709.htm

# * Voyager/515.htm

# * Voyager/416.htm

# * Enterprise/41.htm

#

import re

from collections import defaultdict

from pathlib import Path

EPISODE_REGEX = re.compile(r"^\d+\.html?$")

LINE_REGEX = re.compile(r"^(?P<name>[A-Z']+): ")

EPISODES = Path("www.chakoteya.net")

DISCO = EPISODES / "STDisco17"

ENT = EPISODES / "Enterprise"

TNG = EPISODES / "NextGen"

TOS = EPISODES / "StarTrek"

DS9 = EPISODES / "DS9"

VOY = EPISODES / "Voyager"

NAMES = {

TOS.name: "Those Old Scientists",

TNG.name: "The Next Generation",

DS9.name: "Deep Space Nine",

VOY.name: "Voyager",

ENT.name: "Enterprise",

DISCO.name: "Discovery",

}

class CharacterLines:

def __init__(self, path: Path) -> None:

self.path = path

self.line_count = defaultdict(int)

def collect(self) -> None:

for episode in self.path.glob("*.htm*"):

if EPISODE_REGEX.match(episode.name):

for line in episode.read_text().split("\n"):

if m := LINE_REGEX.match(line):

self.line_count[m.group("name")] += 1

@property

def as_tablular_data(self) -> tuple[tuple[str, int, float], ...]:

total = sum(self.line_count.values())

r = []

for k, v in self.line_count.items():

percentage = round(v * 100 / total, 2)

if percentage > 1:

r.append((str(k), v, percentage))

return tuple(reversed(sorted(r, key=lambda _: _[2])))

def render(self) -> None:

print(f"\n\n# {NAMES[self.path.name]}\n")

print("| Name | Total Lines | Percentage of Lines |")

print("| ---------------- | :---------: | ------------------: |")

for character, total, pct in self.as_tablular_data:

print(f"| {character:16} | {total:11} | {pct:19} |")

if __name__ == "__main__":

for series in (TOS, TNG, DS9, VOY, ENT, DISCO):

counter = CharacterLines(series)

counter.collect()

counter.render()

A big problem here is a whole generation of developers who have learned to build stuff explicitly for AWS. If I ever inherit another Serverless project it'll be too soon.

Serverless, S3, SES, Cognito, and many many other tools are often tightly coupled to the application, so you get hooked on the "free" tier and can't extricate yourself later.

There's some hope here with Docker and Kubernetes, but a lot of companies (especially contractors) only know how to build exclusively on top of these AWS services, so if you're like most start-ups, contracting out first and second generations of your app, you can get committed to AWS for life.