This is also really embarrassing for the journals that published the papers, which are just as guilty. They charge absurd amounts of money to publish articles (the publication fee for a single article easily exceeds the price of a high-end business laptop), and they don't even check them properly?

From this GitHub comment:

If you oppose this, don't just comment and complain, contact your antitrust authority today:

- UK:

This is actually already implemented, see here.

Title:

ChatGPT broke the Turing test

Content:

Other researchers agree that GPT-4 and other LLMs would probably now pass the popular conception of the Turing test. [...]

researchers [...] reported that more than 1.5 million people had played their online game based on the Turing test. Players were assigned to chat for two minutes, either to another player or to an LLM-powered bot that the researchers had prompted to behave like a person. The players correctly identified bots just 60% of the time

Complete contradiction. Trash Nature; it has become nothing more than an extremely expensive science gossip magazine.

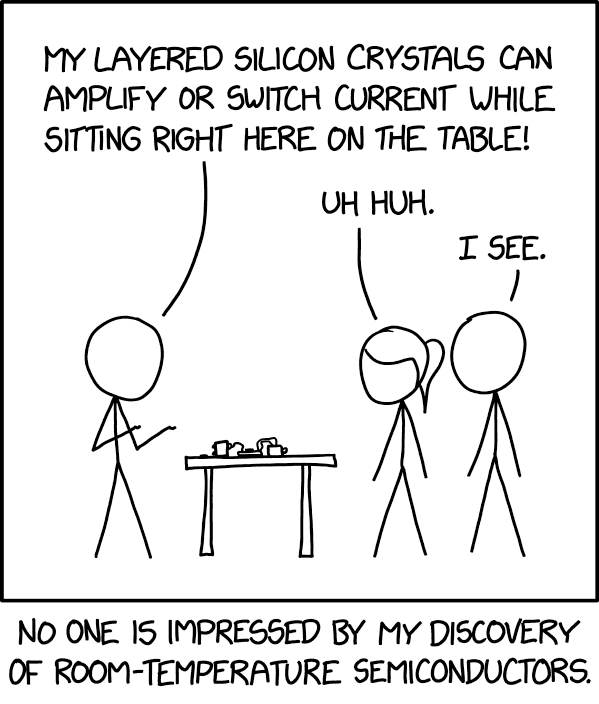

PS: The Turing test involves comparing a bot with a human (not knowing which is which). So if more and more bots pass the test, this can be the result either of an increase in the bots' Artificial Intelligence, or of an increase in humans' Natural Stupidity.

There's an ongoing protest against this on GitHub, symbolically modifying the code that would implement this in Chromium. See this Lemmy post by the person who had the idea, and this GitHub commit. Feel free to "Review changes" --> "Approve". Around 300 people have joined so far.

This image/report itself doesn't make much sense – it was probably generated by ChatGPT itself.

- "What makes your job exposed to GPT?" – OK, I expect a list of possible answers:

  - "Low wages": OK, having a low wage makes my job exposed to GPT.

  - "Manufacturing": OK, manufacturing makes my job exposed to GPT. ...No wait, what does that mean?? You mean that if my job is about manufacturing, then it's exposed to GPT? OK, but then shouldn't this be listed under the next question, "What jobs are exposed to GPT?"?

  - ...

  - "Jobs requiring low formal education": what?! The question was "What makes your job exposed to GPT?". From this answer I get that "jobs requiring low formal education make my job exposed to GPT". Or I get that who/whatever wrote this knows no syntax or semantics. OK, sorry, you meant "If your job requires low formal education, then it's exposed to GPT". But then shouldn't this answer also be listed under the next question??

- "What jobs are exposed to GPT?"

  - "Athletes". Well, semantically speaking, "athletes" is not a job; maybe "athletics" is a job. But who gives a shirt about semantics? There's ChatGPT today, after all.

  - The same goes for the rest. "Stonemasonry" is a job; "stonemasons" are the people who do that job. At least the question could have been "Which job categories are exposed to GPT?".

  - "Pile driver operators": this very specific job category is thankfully Low Exposure. "What if I'm a pavement operator instead?" – sorry, you're out of luck then.

  - "High exposure: Mathematicians". Mmm... wait, wait. Didn't you say that "Science skills" and "Critical thinking skills" were "Low Exposure" in the previous question?

Icanhazcheezeburger? 🤣

(Just to be clear, I'm not making fun of people who do any of the specialized, difficult, and often risky jobs mentioned above. I'm making fun of the fact that the infographic is so randomly and inexplicably specific in some points.)

One aspect that I've always been unsure about with Stack Overflow, and even more with sibling sites like Physics Stack Exchange or Cross Validated (stats and probability), is the voting system. On the physics and stats sites, for example, I have fairly often seen answers that were accepted and upvoted but actually wrong. The point is that users can end up voting for something that looks right or useful even if it isn't (this is probably less of an issue when it comes to programming?).

Now an obvious reply to this comment is "And how do you know they were wrong, and non-accepted ones right?". That's an excellent question – and that's exactly the point.

In the end, the only judge of what's correct is you and your own logical reasoning. In my opinion, sites of this kind should get rid of the voting or acceptance system and simply list the answers, with useful comments and counter-comments under each. When it comes to questions about science and maths, truth is not determined by majority votes or by authorities, but by sound logic and experiment. That's the very basis from which science started. As Galileo put it:

But in the natural sciences, whose conclusions are true and necessary and have nothing to do with human will, one must take care not to place oneself in the defense of error; for here a thousand Demostheneses and a thousand Aristotles would be left in the lurch by every mediocre wit who happened to hit upon the truth for himself.

For example, at some point in history there was probably only one human being on earth who thought "the notion of simultaneity is circular". And at that point in time that human being was right, while the majority who thought otherwise were wrong. Our current education system and sites like these reinforce the anti-scientific view that students should study and memorize what "experts" say, and that majorities dictate what's logically correct or not. As Gibson said (1964): "Do we, in our schools and colleges, foster the spirit of inquiry, of skepticism, of adventurous thinking, of acquiring experience and reflecting on it? Or do we place a premium on docility, giving major recognition to the ability of the student to return verbatim in examinations that which he has been fed?"

Alright, sorry for the rant and tangent! I feel strongly about this situation.

Understandably, it has become an increasingly hostile or apathetic environment over the years. If one checks questions from 10 years ago or so, one generally sees people eager to help one another.

Now they often expect you to have searched through possibly thousands of questions before you ask one, and immediately accuse you if you missed some – which is unfair, because a non-expert can often miss the connection between two questions phrased slightly differently.

On top of that, some of those questions and their answers are years old, so one wonders if their answers still apply. Often they don't. But again it feels like you're expected to know whether they still apply, as if you were an expert.

Of course it isn't all like that; there are still kind and helpful people there. It's just a statistical trend.

Possibly the site should implement an archival policy, where questions and answers are deleted or archived after a couple of years or so.

I'm not fully sure about the logic here, or about the conclusions it perhaps hints at. The internet itself is a network with major CSAM problems (so maybe we shouldn't use it?).

The number of people protesting against them on their "Issues" page is amazing. The devs have now blocked the creation of new issue tickets and of comments on existing ones.

It's funny how in the "explainer" they present this as something done for the "user", when it's clearly not developed for the "user". I wouldn't accept something like this even if it were developed by some government, let alone by Google.

I have just reported their repository to GitHub as malware, as an act of protest, since they closed the possibility of submitting issues or commenting.

Figuratively or for real?